Rekognition is an Amazon service for image and video analysis that can identify the content of such media. It is built on Amazon’s machine learning capabilities but requires no machine learning knowledge to use. We simply pass media to the Rekognition APIs and get the analysis results back quickly. Rekognition returns a list of detected content labels from its standardized category set, each with a confidence score; a higher score indicates a higher probability that the detected label is accurate.

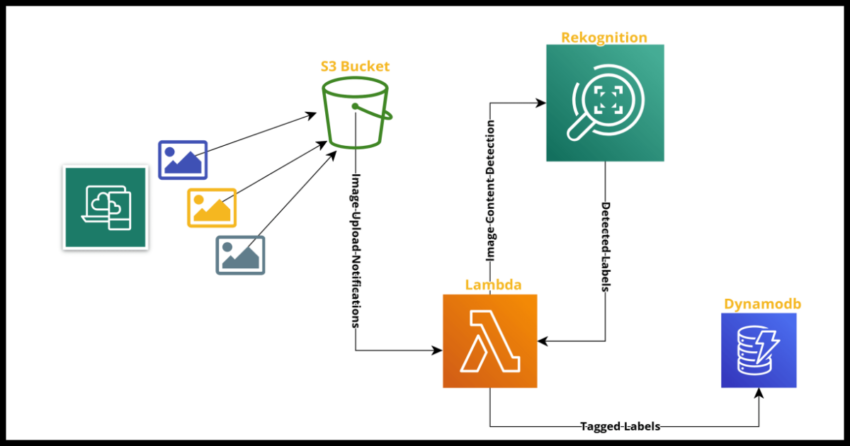

This makes Rekognition a great service for scanning and tagging media as it is uploaded to a system, for needs ranging from filtering by type to moderating images based on content. This post demonstrates how to do just that: build an image detection system that scans images uploaded to an S3 bucket, using a Python Lambda to invoke Rekognition, run the content detection and save the detected labels for each image in DynamoDB. This system can then serve as the base for your own specific needs, such as hiding or showing warnings on moderated content in a UI front end, or indexing the images for search.

We will define the serverless stack using CloudFormation, and the end result will be a workflow like the one in the feature image above.

Creating an S3 Bucket for the image uploads

Ultimately, we want image uploads to an S3 bucket to be delivered to our Rekognition processing Lambda as events. Since we will be using CloudFormation with AWS SAM, we need to define the S3 bucket in the same YAML template as our Lambda, because a SAM function's S3 event can only reference a bucket declared in the same template. If this is a self-contained serverless application, it makes sense to do so anyway. However, keep this limitation in mind if you need to work with an existing bucket in an already live system. If you cannot add your Lambda to the template where the bucket was created, or if the bucket was created manually, you will have to set up the event notifications outside the template, for example via the console. We will get to the event definition when we create the Lambda. For now, let's start our YAML with a simple bucket definition.

    AWSTemplateFormatVersion: '2010-09-09'
    Transform: AWS::Serverless-2016-10-31
    Description: Image Content Detection with AWS Rekognition and Python Lambda

    Resources:
      ImagesBucket:
        Type: AWS::S3::Bucket
        Properties:
          BucketName: !Sub "images-bucket-${AWS::Region}-${AWS::AccountId}"

If you are wondering why the bucket name follows the pattern above: bucket names are globally unique, so if we want to reuse this stack in another region or account, it makes sense to add an identifier such as the region and account ID as a prefix or suffix when creating S3 buckets.
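
For the existing-bucket case mentioned earlier, the console is not the only route: the same notification can be attached programmatically. The sketch below is one possible approach, not part of the stack in this post. It assumes boto3's `put_bucket_notification_configuration` call on the S3 client, and the helper names `build_notification_config` and `attach_notification` are made up for illustration. Two caveats: the call replaces the bucket's entire notification configuration, and the Lambda also needs a resource-based permission that lets S3 invoke it, which is not shown here.

```python
def build_notification_config(lambda_arn, events=("s3:ObjectCreated:*",)):
    """Build the NotificationConfiguration dict that routes S3 events to a Lambda."""
    return {
        "LambdaFunctionConfigurations": [
            {
                "LambdaFunctionArn": lambda_arn,
                "Events": list(events),
            }
        ]
    }


def attach_notification(bucket_name, lambda_arn):
    """Apply the configuration to an existing bucket (hypothetical helper).

    Note: this overwrites any notification configuration already on the bucket.
    """
    import boto3  # imported lazily so the builder above can be used without AWS access

    s3 = boto3.client("s3")
    s3.put_bucket_notification_configuration(
        Bucket=bucket_name,
        NotificationConfiguration=build_notification_config(lambda_arn),
    )
```

If the bucket already has other notifications, you would likely want to read the current configuration first and merge the new entry into it rather than overwrite it.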

If you are new to CloudFormation, you might find these previous posts of mine useful as a reference:

How to deploy a cloudformation template with AWS SAM

How to build and deploy a Python Lambda with Cloudformation and AWS SAM

Creating a DynamoDB Table to store the detected labels

We will create a simple table with the image key as the DynamoDB partition key and the label name as the sort key. This is sufficient for the basic case of getting all the labels for an image or looking up specific labels on an image, and it can be built out to support other access patterns as your application requires.

      ImageLabelsTable:
        Type: AWS::DynamoDB::Table
        Properties:
          TableName: image-labels-table
          BillingMode: PAY_PER_REQUEST
          AttributeDefinitions:
            - AttributeName: image_key
              AttributeType: S
            - AttributeName: image_label
              AttributeType: S
          KeySchema:
            - AttributeName: image_key
              KeyType: HASH
            - AttributeName: image_label
              KeyType: RANGE

Building the Rekognition Lambda

Now for the heart of our application. We want our Lambda to receive S3 upload notifications. It will then pass the uploaded image's location to Rekognition using the boto3 SDK, let Rekognition do its AI magic, and save the returned content labels in our DynamoDB table. We will cover the Python code after walking through the YAML definition.

      RekognitionLambda:
        Type: AWS::Serverless::Function
        Properties:
          Architectures:
            - arm64
          Runtime: python3.9
          CodeUri: ./rekognition-lambda/
          Handler: rekognition-lambda.event_handler
          Description: Scan and detect uploaded images using AWS Rekognition
          FunctionName: rekognition-lambda
          Environment:
            Variables:
              IMAGE_LABELS_TABLE: !Ref ImageLabelsTable
          Policies:
            - Statement:
                - Effect: Allow
                  Action:
                    - "rekognition:*"
                  Resource:
                    - "*"
            - Statement:
                - Effect: Allow
                  Action: s3:GetObject*
                  Resource:
                    - !Sub "arn:aws:s3:::images-bucket-${AWS::Region}-${AWS::AccountId}"
                    - !Sub "arn:aws:s3:::images-bucket-${AWS::Region}-${AWS::AccountId}/*"
            - DynamoDBCrudPolicy:
                TableName:
                  Ref: ImageLabelsTable
          Events:
            S3Event:
              Type: S3
              Properties:
                Bucket:
                  Ref: ImagesBucket
                Events: s3:ObjectCreated:*

There is a lot going on there, but it is quite simple really. We have defined our Lambda and indicated where its code is located. We have three IAM policies that the Lambda needs to operate: access to AWS Rekognition, read access to the S3 bucket and all content in it, and access to the DynamoDB table to write the labels to. And we have an S3 event that delivers “ObjectCreated” events (file uploads, really) to the Lambda. Now let's walk through the Python code.

    import os
    import urllib.parse
    from decimal import Decimal

    import boto3

    rekognition_client = boto3.client('rekognition')
    dynamodb = boto3.resource("dynamodb")
    image_labels_table = dynamodb.Table(os.environ["IMAGE_LABELS_TABLE"])


    def event_handler(event, context):
        # A single notification can carry more than one record.
        for record in event['Records']:
            bucket = record['s3']['bucket']['name']
            # Object keys arrive URL-encoded in S3 events, so decode them first.
            key = urllib.parse.unquote_plus(record['s3']['object']['key'], encoding='utf-8')

            # Ask Rekognition to analyse the image directly from S3.
            response = rekognition_client.detect_moderation_labels(
                Image={'S3Object': {'Bucket': bucket, 'Name': key}})

            for label in response['ModerationLabels']:
                image_labels_table.put_item(Item={
                    "image_key": key,
                    "image_label": label["Name"],
                    "parent_label": label["ParentName"],
                    # The boto3 DynamoDB resource rejects Python floats; use Decimal.
                    "confidence": Decimal(str(label['Confidence']))
                })

The Python code is much more straightforward and shows how simple Rekognition is to use: invoke the service with the image's S3 location, as coded above, and work with the response.
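
One detail in the handler that is easy to miss is the key decoding: S3 URL-encodes object keys in its event payloads, so a file name with spaces or parentheses will not match the real object until it is decoded. The following is a small standalone sketch of just that step, so it can be tried without AWS access. The function name `extract_s3_objects` and the sample event are made up for illustration, and the event is trimmed to the fields the handler reads.

```python
import urllib.parse


def extract_s3_objects(event):
    """Return a (bucket, decoded_key) pair for every record in an S3 event."""
    objects = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # unquote_plus turns '+' into spaces and decodes %XX escapes.
        key = urllib.parse.unquote_plus(record["s3"]["object"]["key"], encoding="utf-8")
        objects.append((bucket, key))
    return objects


# Hypothetical, trimmed-down event for an object named "uploads/my photo(1).jpg".
sample_event = {
    "Records": [
        {
            "s3": {
                "bucket": {"name": "images-bucket-example"},
                "object": {"key": "uploads/my+photo%281%29.jpg"},
            }
        }
    ]
}

print(extract_s3_objects(sample_event))
# prints [('images-bucket-example', 'uploads/my photo(1).jpg')]
```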

For each detected label, Rekognition provides a “ParentName”, which is a higher-level category, along with the specific label name and a confidence score. In an actual application, you will need to work with thresholds on that confidence score depending on your use case. If, for instance, it is extremely important to filter out all inappropriate content (what counts as inappropriate is again up to the use case), even at the expense of filtering out some images that are not really inappropriate, go with a lower confidence threshold, like 50%. On the other hand, if you only want to tag images that definitely contain, say, mountains, go with a higher confidence threshold, like 80%. Note that general content labels such as mountains come from Rekognition's detect_labels call; detect_moderation_labels, used above, only returns moderation categories.

The last part of the code saves each label to DynamoDB.
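
To make the threshold idea concrete, here is a minimal sketch that filters a response by confidence before anything is stored. The helper name `labels_at_or_above` and the sample response values are invented for illustration; only the `ModerationLabels`, `Name`, `ParentName` and `Confidence` fields come from the handler above.

```python
def labels_at_or_above(response, min_confidence):
    """Keep only the moderation labels whose confidence meets the threshold."""
    return [
        (label["ParentName"], label["Name"], label["Confidence"])
        for label in response.get("ModerationLabels", [])
        if label["Confidence"] >= min_confidence
    ]


# Made-up response in the shape the handler reads; the values are illustrative only.
sample_response = {
    "ModerationLabels": [
        {"Name": "Violence", "ParentName": "", "Confidence": 91.2},
        {"Name": "Weapons", "ParentName": "Violence", "Confidence": 62.5},
    ]
}

strict_filtering = labels_at_or_above(sample_response, 50)  # catches both labels
confident_only = labels_at_or_above(sample_response, 80)    # keeps only the first
```

Rekognition also accepts a `MinConfidence` parameter on the `detect_moderation_labels` call itself, which drops low-confidence labels before they are returned. Filtering in your own code, as sketched here, may still be useful when different consumers of the stored labels need different thresholds.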
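
Reading the labels back follows from the key design: because the image key is the partition key, all labels for one image can be fetched with a single query. This is a rough sketch rather than part of the original stack. It assumes `table` is a boto3 DynamoDB `Table` resource (or anything with a compatible `query` method), and the function name `labels_for_image` is hypothetical.

```python
def labels_for_image(table, image_key):
    """Return every stored label item for one image, following pagination."""
    items = []
    query_args = {
        "KeyConditionExpression": "image_key = :k",
        "ExpressionAttributeValues": {":k": image_key},
    }
    while True:
        page = table.query(**query_args)
        items.extend(page.get("Items", []))
        last_key = page.get("LastEvaluatedKey")
        if not last_key:
            # No more pages: DynamoDB omits LastEvaluatedKey on the final page.
            return items
        # Continue the query from where the previous page stopped.
        query_args["ExclusiveStartKey"] = last_key
```

With the table from this post, a call might look like `labels_for_image(boto3.resource("dynamodb").Table("image-labels-table"), "uploads/cat.jpg")`, where the object key is just an example.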

Concluding

This is a baseline project which can be extended or modified and then integrated with other parts of your application. One thing not covered here is handling video, which requires more wiring because video analysis is job-based and asynchronous. I will cover that in a future post.

Hope you found this useful and feel free to reach out via the contact form for any questions.